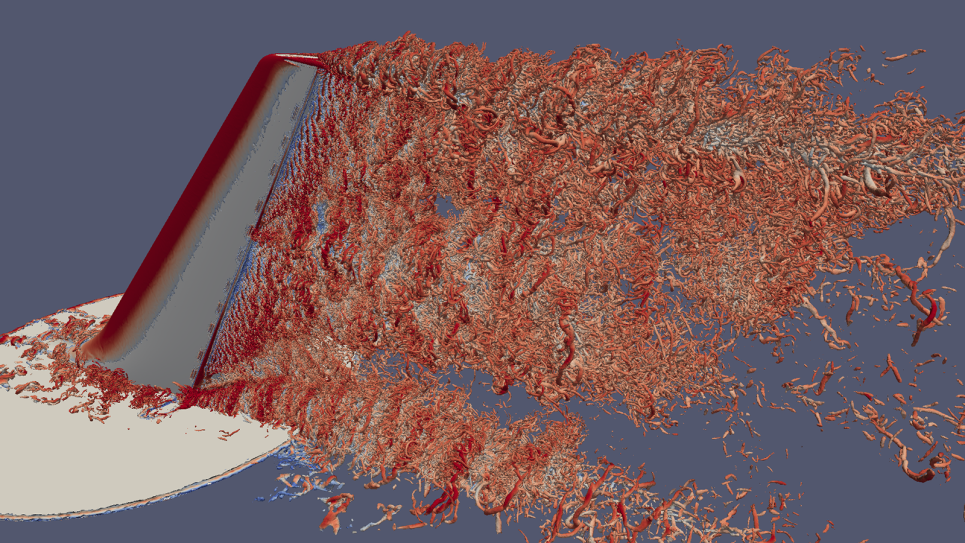

This visualization shows airflow patterns over an aircraft’s vertical tail, highlighting how advanced simulations capture complex turbulence caused by rudder movement and air jets. (Image: Kenneth Jansen, University of Colorado Boulder)

Using ALCF's Aurora exascale system, a University of Colorado Boulder-led team is combining large-scale simulations and machine learning to advance turbulence modeling and inform the design of more efficient aircraft.

With unprecedented capabilities for simulation and artificial intelligence, the Aurora supercomputer at the U.S. Department of Energy’s (DOE) Argonne National Laboratory is helping researchers explore new ways to design more efficient airplanes.

As one of the world’s first exascale supercomputers, Aurora can perform more than a quintillion calculations per second. Housed at the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science user facility, it also ranks as one of the top systems for AI performance. A team led by researchers at the University of Colorado Boulder is using Aurora’s exascale computing power, along with advanced machine learning techniques, to study airflow around commercial aircraft, providing insights to inform the design of next-generation airplanes.

“With Aurora, we’re able to run simulations that are larger than ever before and on more complex flows. These simulations help improve the predictive models that are applied to even more complex cases, such as capturing the flow physics around a full vertical tail and rudder assembly of an aircraft at full flight scale,” said Kenneth Jansen, professor of aerospace engineering at the University of Colorado Boulder. “Our goal is to understand the fundamental physics involved and work with manufacturers to use those insights to design better airplanes.”

Traditionally, airplanes are designed to handle worst-case scenarios, such as taking off in a crosswind with one engine out. As a result, airplanes often have vertical tails larger than is needed for most flights.

“The vertical tail on any standard plane is as large as it is precisely because it needs to be able to work effectively in such a situation. The rest of the time, however, that vertical tail is larger than would be necessary, thus adding unnecessary drag and fuel consumption,” said Riccardo Balin, ALCF assistant computational scientist. “Our team thought that if we could understand the physics of the flow better, we could design a smaller tail that’s still effective in worst-case-scenario conditions.”

The team is using Aurora to run large-scale fluid dynamics simulations with HONEE, an open-source solver designed to model the complex and chaotic behavior of turbulent airflow. Their high-fidelity simulations generate training data to develop machine-learning-driven subgrid stress (SGS) models, which are a key part of the turbulence models used in lower-resolution simulations. SGS models capture the effects of small-scale turbulence that a lower-resolution simulation does not resolve directly but that are critical for accurate airflow predictions.
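To make the idea concrete: in large-eddy simulation, the subgrid stress is conventionally defined as the filtered product of velocities minus the product of the filtered velocities. The sketch below evaluates that definition on a one-dimensional toy signal. It is an illustration only, not HONEE's formulation; the box filter, the synthetic signal, and the function names are all assumptions chosen for brevity.

```python
import math

def box_filter(field, width):
    """Periodic moving average over `width` neighbouring points (width odd)."""
    n = len(field)
    half = width // 2
    return [
        sum(field[(i + k) % n] for k in range(-half, half + 1)) / width
        for i in range(n)
    ]

def sgs_stress_1d(u, width):
    """tau = filter(u*u) - filter(u)*filter(u) for a 1D periodic velocity sample."""
    u_bar = box_filter(u, width)
    uu_bar = box_filter([v * v for v in u], width)
    return [uu - ub * ub for uu, ub in zip(uu_bar, u_bar)]

# Synthetic "high-fidelity" signal (assumed): a large-scale wave plus a small-scale wave.
n = 256
u = [math.sin(2 * math.pi * i / n) + 0.2 * math.sin(2 * math.pi * 32 * i / n)
     for i in range(n)]

# The filter removes most of the small-scale wave; tau records what was lost.
tau = sgs_stress_1d(u, width=9)
```

A coarse simulation only has access to the filtered velocity, so it cannot compute `tau` directly. A learned SGS model is trained to estimate it from resolved quantities, using pairs like these extracted from high-fidelity runs.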

Developing improved subgrid stress models can significantly reduce simulation costs while maintaining high fidelity. Ultimately, the team’s approach aims to reduce the need for costly wind tunnel and flight tests.

“While that’s a very ambitious goal, getting there involves improving the accuracy and robustness of our simulation tools, so that we can trust the results they provide, as well as leveraging near-future computing powers so as to be able to run larger, more realistic simulations of these flow geometries,” said Kris Rowe, ALCF assistant computational scientist.

Conventional approaches to training machine learning turbulence models rely on extensive stored datasets and slow offline analysis. The team’s new method employs “online” machine learning during the simulation itself, saving time and bypassing the need to store massive amounts of data.

“Online machine learning refers to carrying out simulations that produce training data at the same time that the actual training task is carried out,” Rowe said.
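A minimal sketch of that pattern, under heavy simplification: a generator stands in for the solver and yields one sample per time step, and a linear model is updated on each sample as it arrives, so no dataset is ever accumulated. The "truth" relation, the learning rate, and the function names are hypothetical and are not drawn from the team's code.

```python
import random

def simulation_steps(n_steps, seed=0):
    """Stand-in for a solver: yields one (input, target) pair per time step."""
    rng = random.Random(seed)
    for _ in range(n_steps):
        x = rng.uniform(-1.0, 1.0)
        yield x, 3.0 * x + 0.5  # hypothetical relation the model should learn

def train_online(steps, lr=0.1):
    """Update a linear model y = w*x + b on each sample as it is produced."""
    w, b = 0.0, 0.0
    for x, y in steps:
        err = (w * x + b) - y  # prediction error on the newest sample
        w -= lr * err * x      # stochastic gradient step for the slope
        b -= lr * err          # stochastic gradient step for the intercept
    return w, b

# Each sample is consumed once and then discarded; nothing is written to disk.
w, b = train_online(simulation_steps(5000))
```

In a real workflow the solver and the trainer run as separate processes and the model is a neural network, but the control flow is the same: training consumes data at the rate the simulation produces it.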

“We’re ensuring we can examine the simulation fields in real time and extract dynamics as the simulation progresses,” Jansen explained. “On the machine learning side, we’re using those same analytics to understand how they impact turbulence models. Machine learning allows us to uncover modeling behaviors that complement and extend our current understanding.”

Using this technique, the team is building smarter models that can predict the behavior of turbulent air, especially in challenging conditions where traditional models struggle. This novel approach also enables scientists to test new ideas for real-time flow control and evaluate how smaller tail designs would perform in extreme conditions.

“Turbulence, as a very chaotic, nonlinear system, is itself highly complex,” Rowe said. “That means that correlations between inputs and outputs in a turbulence model are difficult to identify. Successful turbulence models require lots of physics embedded into them, and domain expertise is a necessity. To generate high-quality turbulence data requires running numerous high-fidelity, large-scale simulations, making even data generation itself an Aurora-scale problem.”

The team leverages a suite of advanced computational tools to enable this work at scale. Specialized software like the open-source SmartSim library streams data directly from simulations to machine learning models on the same compute node, eliminating time-consuming writes to disk and enabling simultaneous training and simulation.

“SmartSim effectively allows users to create a staging area where they can store data — say, from a simulation — without writing them to the parallel file system,” Balin explained. “It enables a collocated approach in which we can run simulation and training simultaneously within the same nodes, using the same resources. This approach ensures that data being shared between simulation and training never have to leave the node, which is essential for handling the massive data flows on Aurora.”
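The staging idea can be sketched with a small in-memory key-value store shared by a producer and a consumer. This is a stand-in written for illustration and is not SmartSim's API; the `StagingArea` class, its methods, and the key names are hypothetical, and two threads take the place of the simulation and training processes that would share a node.

```python
import threading

class StagingArea:
    """Hypothetical in-memory key-value store; a stand-in, not SmartSim's API."""

    def __init__(self):
        self._data = {}
        self._cond = threading.Condition()

    def put(self, key, value):
        with self._cond:
            self._data[key] = value
            self._cond.notify_all()

    def take(self, key, timeout=5.0):
        """Block until `key` is available, then remove and return its value."""
        with self._cond:
            if not self._cond.wait_for(lambda: key in self._data, timeout):
                raise TimeoutError(key)
            return self._data.pop(key)

def simulate(store, n_steps):
    for step in range(n_steps):
        snapshot = [step * 0.1 + i for i in range(4)]  # fake flow-field slice
        store.put(f"step_{step}", snapshot)

def train(store, n_steps, seen):
    for step in range(n_steps):
        # A real trainer would run a gradient update here; we just reduce the data.
        seen.append(sum(store.take(f"step_{step}")))

store, seen = StagingArea(), []
workers = [threading.Thread(target=simulate, args=(store, 8)),
           threading.Thread(target=train, args=(store, 8, seen))]
for t in workers:
    t.start()
for t in workers:
    t.join()
```

Because the consumer removes each snapshot as soon as it has used it, the store holds only data in flight, which is the property that lets this kind of design avoid the parallel file system.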

To carry out the large-scale numerical calculations required for these turbulent airflow simulations, the team employs PETSc, an Argonne-developed open-source toolkit that provides scalable, efficient solvers for complex scientific computing problems on supercomputers like Aurora.

By combining Aurora’s exascale computing power with these innovative tools, the team is transforming how aircraft can be designed and tested in a virtual environment, speeding up the development of next-generation aircraft while reducing the need for expensive and time-consuming physical tests.

“We work with airplane manufacturers to make sure that we study the problems most relevant to both current and future designs,” Jansen said.

The team’s work on Aurora is supported by the ALCF’s Aurora Early Science Program and DOE’s Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program.